Could we have a discussion about the best practices for running the plugin at the lowest latency possible?

This is only my opinion, so please take it with a serious grain of salt! Latency has always been a major hurdle in computer recording, and most musicians view it as a huge turn-off and annoyance. I for one have been trying my best to avoid it by spending a large amount of time and money on fast Thunderbolt interfaces. Still, that does not save you when it comes to large buffer sizes, but at least it makes you more prepared to deal with them, perhaps?

Nonetheless, I see this plugin as a huge step forward. My first impressions are very good! I’m using this MIDI more as a layer on an already thick guitar sound, so it’s performing very well on the beta test run so far…

For sure. Since the days of Windows 98, everything has got a lot better, but we were, and still are, forced to improve our workflow to avoid as much latency as possible.

The first thing you can do is use the built-in instruments and then use that MIDI afterwards on a VST you want (especially if it is Omnisphere or something).

Try to commit to audio as much as possible, and don’t overload your projects with too many FX etc. You can use busses and sends to reduce this. Nothing on the master channel.

I use MIDI Guitar first in a project to skeleton out my MIDI notes. I very rarely use it later on in a project. However, when I do, I do the same as if I were, say, recording vocals on a heavily laden track: I bounce a version of the track (give or take a few layers for space). I then go ham on a fresh project, making new layers there until the CPU gets me, then I transfer those layers back to the main project.

Oftentimes I will use a second computer running the instrument into the soundcard of my main computer, and then I am committed to audio straight away. Depending on how nifty you are with audio editing and the performance itself in comparison to MIDI editing, this is still the best option for me, and allows me to fundamentally use MIDI Guitar as audio. Then I can delve a bit deeper into the sounds I want without too much built-up lag, as only one VST ever runs at a time. Bear in mind you can still record the MIDI and transfer it via USB. I used to use Melodyne and transfer to MIDI, but this program is way hotter at MIDI transcription.

Hello Jon, welcome here

Start by searching this forum for answers by typing “latency” in the search field, and you’ll find this, for example: Latency

There are many other interesting posts.

Secondly, we don’t know the characteristics of your equipment, especially your OS, so I can only add answers for Windows users.

Here is another post found with the search field: Midi guitar 2 awful latency(pc)

Furthermore, Windows being a “family” OS crammed with useless stuff, the aim is to remove many useless elements and to have a machine dedicated as much as possible to audio.

This will of course reduce latency and improve the performance of your machine, but above all it will ensure that you have a reliable and stable system.

Take a look at this guide to optimize Windows and, depending on your level of knowledge, apply the advice: The Ultimate Guide to Optimize your Windows PC

The reported latencies in the MG interface (whether MG2 or MG3) are just a simple calculation using the formula:

BufferSize / SampleRate * 1000

So with BufferSize = 256 and SampleRate = 44100:

256 / 44100 * 1000 = 5.8 ms.

The real latency, i.e. from the onset of a note to the moment a noteOn value is produced, is far higher.
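For anyone who wants to play with the numbers, the formula above can be sketched in a few lines of Python. The function name `buffer_latency_ms` is just something made up for this post, and as noted this is only the duration of one buffer, not the real note-to-noteOn latency.

```python
def buffer_latency_ms(buffer_size: int, sample_rate: int) -> float:
    """Duration of one audio buffer in milliseconds (BufferSize / SampleRate * 1000)."""
    return buffer_size / sample_rate * 1000


# Print the one-buffer latency for a few common settings.
for sample_rate in (44100, 48000, 96000):
    for buffer_size in (32, 64, 128, 256):
        ms = buffer_latency_ms(buffer_size, sample_rate)
        print(f"{buffer_size:>4} samples @ {sample_rate} Hz = {ms:.1f} ms")
```

Halving the buffer or doubling the sample rate halves this figure, which is all the reported number reflects.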

Thor, I’ll dig into this when I have more time, but I think a lot of the latency you observe comes from the communication between MG and Logic.

You are trying to measure Logic Audio => MG AU => MG3 Tracking => macOS Virtual MIDI => Logic MIDI Input => Logic’s audio engine (plus maybe => some synth in Logic). Each step has latency, and Logic may schedule this chain as it wants, but only in large latency chunks with MG running at 44.1 kHz with 256-sample buffers. We aren’t yet using Apple’s “Audio Workgroups”, which might help Logic schedule processing.

The MG tracking doesn’t add as much as you think, but I’ll have to run tests when things cool down here (and after a week of sleep).

If you really want to do those measurements with Logic, you should instead run Logic Audio => MG Audio => Logic Audio, where MG Audio is made up of 2 MG Chains, one direct guitar and the other a snappy synth. Then in Logic you may be able to observe the two peaks and measure the difference.

But even then, you may get better results with the MG standalone.
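If you export the two recorded tracks as sample lists, the "measure the difference between the two peaks" step could look roughly like this. It is only a sketch under simple assumptions: samples are floats in the range -1..1, the onset is taken as the first sample crossing a fixed threshold, and the function names and the threshold of 0.1 are made up for illustration. The signals below are synthetic, not real measurements.

```python
def first_onset(samples, threshold=0.1):
    """Index of the first sample whose absolute value reaches the threshold, or None."""
    for index, sample in enumerate(samples):
        if abs(sample) >= threshold:
            return index
    return None


def onset_gap_ms(direct, synth, sample_rate, threshold=0.1):
    """Time in ms between the onset in the direct track and the onset in the synth track."""
    direct_onset = first_onset(direct, threshold)
    synth_onset = first_onset(synth, threshold)
    if direct_onset is None or synth_onset is None:
        raise ValueError("no onset found in one of the signals")
    return (synth_onset - direct_onset) / sample_rate * 1000


# Synthetic example: the synth track starts 882 samples after the direct guitar.
sample_rate = 44100
direct = [0.0] * 1000 + [0.8] * 100
synth = [0.0] * 1882 + [0.8] * 100
print(f"{onset_gap_ms(direct, synth, sample_rate):.1f} ms")
```

A fixed threshold is crude on real guitar recordings with noise before the pick attack, so treat the result as a rough figure.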

Sorry, I don’t have time now.

One of the most important factors determining your latency (among others mentioned here) is the soundcard you use and the drivers involved.

Some brands (RME is the best in this area) offer stable, low-latency performance, so you can run your system at very low latency with great stability.

A good reference about how different soundcards perform is here: https://gearspace.com/board/music-computers/618474-audio-interface-low-latency-performance-data-base.html

With other brands there can be more or fewer problems: extra buffers, more latency than the system reports, etc.

So, for me, the most important thing is to have a good computer and a good sound card.

I totally appreciate the number of questions and fixes you need to deal with right now, so feel no rush to elaborate on this.

In the past I have done different types of testing:

- MG2 running as AU plugin in Logic and Ableton Live

- MG2 running as standalone sending midi over the IAC bus to Logic and Ableton Live

- MG2 running as standalone sending midi over the IAC bus to SuperCollider

I was not using synths in Logic or Ableton, just midi and a buffersize matching that of MG2:

Buffer of 64 samples, samplerate of 48000.

The best results (as I expected) came from using MG2 in standalone sending midi to SuperCollider. I will do some more rigorous testing and report back.

I just now tested the speed of the IAC bus by sending midiout from SuperCollider to the IAC bus and receiving midi from the IAC bus, also in SuperCollider. The latency of the IAC bus seems to be very low, mean value = 0.25 ms, worst case = 0.33 ms.

Same test sending midi through Logic Virtual Out with Logic in the background and an external midi track enabled produces similar results, mean = 0.34 ms, worst case = 0.48 ms.

(Based on sending 100 noteOns with a spacing of 0.2 seconds.)
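The mean and worst-case figures above are simple statistics over send/receive timestamp pairs. The actual test was done in SuperCollider; the Python below is only a sketch of the arithmetic, and the function name and the timestamps in the example are made up for illustration, not measured data.

```python
from statistics import mean


def latency_stats_ms(sent, received):
    """Return (mean, worst case) latency in ms from paired timestamps given in seconds."""
    if len(sent) != len(received):
        raise ValueError("every sent message needs a matching received timestamp")
    deltas = [(r - s) * 1000 for s, r in zip(sent, received)]
    return mean(deltas), max(deltas)


# Illustrative timestamps only: 5 noteOns spaced 0.2 s apart with invented delays.
sent = [i * 0.2 for i in range(5)]
delays = [0.00025, 0.00030, 0.00020, 0.00033, 0.00022]
received = [t + d for t, d in zip(sent, delays)]

average, worst = latency_stats_ms(sent, received)
print(f"mean = {average:.2f} ms, worst case = {worst:.2f} ms")
```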

My comment only concerns Windows:

I did some comparative tests in several situations using:

- the same programs and plugins in Windows 11

- with either an RME interface or a cheap Steinberg UR22

- on a recent 2022 PC i7 12700H 32GB RAM

- on an old 2012 PC i5 3320M 8GB RAM

In all otherwise identical cases, the RME has slightly less latency than the Steinberg.

In one specific case, the old PC with the Steinberg + Windows fully optimised for audio has less latency than the recent PC with RME + non-optimised Windows PC.

This is due to the fact that, with non-optimised Windows, the impressive number of unnecessary interruptions from sub-programmes, background tasks, system routines, etc, will lead to excessive consumption of processor cycles, resulting in audio crackles that need to be compensated for by increasing the buffer size.

The result is higher latency.

I appreciate everyone adding to this discussion. I agree that the computer and interface are the most important pieces to latency. I use a UA Apollo x8 + Mac mini M2 - which I believe would be as fast as all the systems mentioned above.

My concern for the VST is its “playability”, which is basically how quickly your guitar notes generate sounds: what is the time from note on the fretboard to note on the synth?

I always use a DAW and 3rd party synths as that is what is most useful to me. If this plugin is a replacement for you just playing a synth with a keyboard.

From my testing I have determined the following …

Latency zones

Above 25 ms - generally noticeable - pads and non-rhythmic sounds only

15-25 ms - playable on rhythm, but other band members will complain

10-15 ms - noticeable latency, but usable in a performance context

5-10 ms - barely noticeable range

Sub 5 ms - not noticeable latency
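As a rough sketch, the zones above can be turned into a small lookup so you can check a measured figure against them. The function name is made up, and which zone an exact boundary value (5, 10, 15 or 25 ms) falls into is my own assumption, since the list itself overlaps at the edges.

```python
def latency_zone(latency_ms: float) -> str:
    """Map a total latency in ms to one of the zones listed above.

    Boundary handling is an assumption: each zone includes its lower bound,
    and exactly 25 ms still counts as the 15-25 ms zone.
    """
    if latency_ms < 5:
        return "not noticeable"
    if latency_ms < 10:
        return "barely noticeable"
    if latency_ms < 15:
        return "noticeable, but usable in a performance context"
    if latency_ms <= 25:
        return "playable on rhythm, but other band members will complain"
    return "generally noticeable; pads and non-rhythmic sounds only"


for value in (3, 8, 12, 20, 40):
    print(f"{value} ms: {latency_zone(value)}")
```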

What I have also noticed …

MIDI Guitar 2 runs at its lowest latency when the DAW is set to 96k with a buffer of 128 or less.

Anything above 128 buffer will put you into the overly noticeable range.

48k is more noticeable than 96k

Would the plugin run best at 192k/32? Potentially.

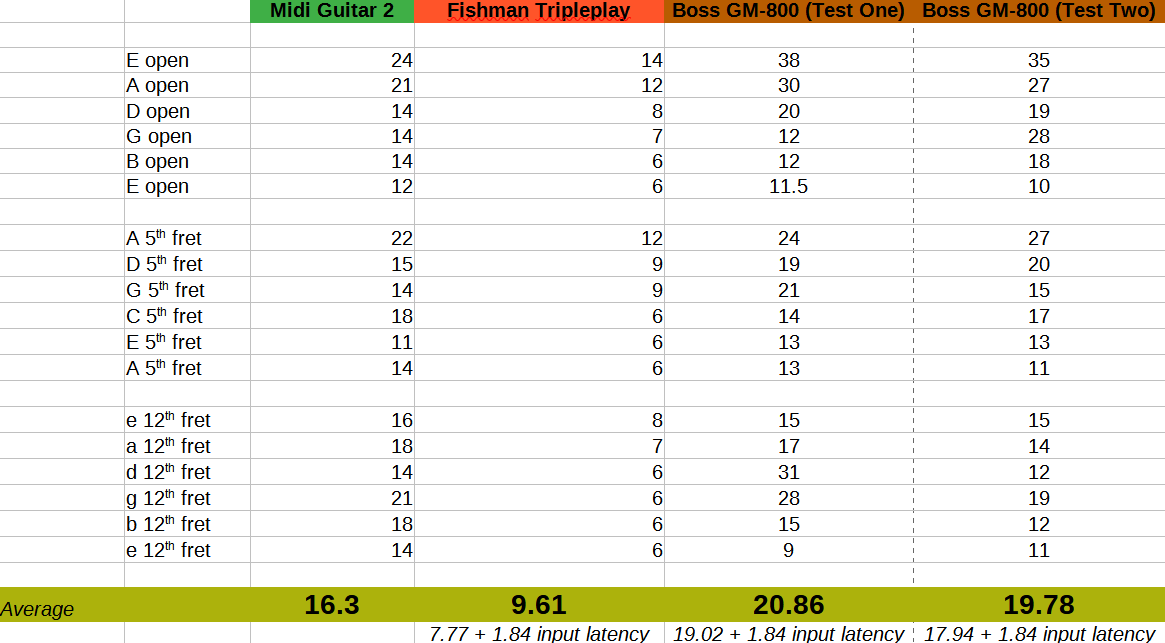

See below for a test of 18 values (open strings, strings at fifth fret and strings at twelfth fret) in MG2 mono mode (fastest conversion speed). This is the midi conversion speed (the time from guitar audio to production of a midi note). The average was 16.3 ms.

Add to this your audio interface roundtrip latency (input plus output latency) and the latency of your VST synth, and you arrive at your total latency. So if your audio interface roundtrip latency is 8 ms and your software plugin latency is 3 ms, the average latency would be 27.3 ms. If using monitors and not headphones, add 1 ms per foot of distance between the monitor and your ears.
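The sum described above is easy to sketch in Python if you want to plug in your own numbers. The function and parameter names are made up for this post, and the 1 ms per foot figure is the same rule of thumb as in the text.

```python
MS_PER_FOOT = 1.0  # rule of thumb from the text: about 1 ms per foot of air


def total_latency_ms(conversion_ms, interface_roundtrip_ms, plugin_ms,
                     monitor_distance_ft=0.0):
    """Add up conversion, interface roundtrip, synth plugin and speaker distance."""
    return (conversion_ms + interface_roundtrip_ms + plugin_ms
            + monitor_distance_ft * MS_PER_FOOT)


# Figures from the post: 16.3 ms conversion, 8 ms roundtrip, 3 ms plugin.
print(f"headphones: {total_latency_ms(16.3, 8, 3):.1f} ms")
# Same setup, sitting 3 feet from the monitors.
print(f"monitors at 3 ft: {total_latency_ms(16.3, 8, 3, monitor_distance_ft=3):.1f} ms")
```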

I expect with Poly mode, you would be in the 40 ms. average territory. What it is for MG3, I don’t know. Looking forward to seeing the results.

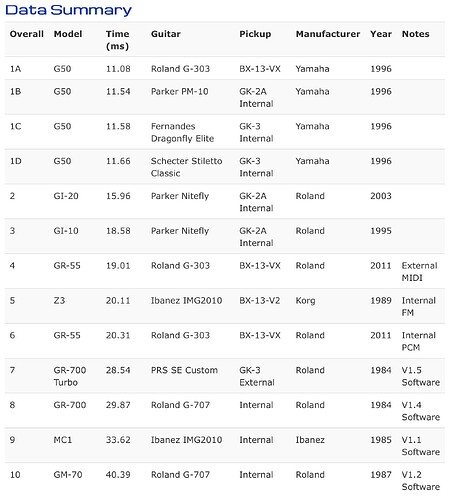

You can compare this with midi synths/converters of the past (the G50 used Fishman Tripleplay style conversion methods).

@lpspecial - thanks for the detailed testing results. I just did some testing with MG2 vs. MG3 showing that MG3 lags behind MG2 by approx. 6-13 ms. The test was done both with standalone sending to SuperCollider and in Ableton Live, where I first recorded some guitar and then subsequently converted it to midi with MG2 and MG3. Ableton sample rate at 44100 and buffer size at 256. The false positives were different, but not in a way that immediately suggested MG3 is overall better in this regard. I hope some of these issues will be worked out.

I did some more testing and I am pleased to say I exaggerated the overall latency of MG2 and MG3. I tested standalone vs. AU plugin in Ableton Live, same results pretty much. The average latency for MG2 seems to be about 20-25 ms pretty much regardless of buffer size. Also, a smaller buffer size doesn’t always result in faster detection, and the quality of the detection is also not conclusively better for any one option. The differences in latency between different buffer sizes are within 2 ms. I suspect that the buffer size refers to the window size of the FFT analysis in some way, so it is not so surprising that one buffer size is not always better than another.

MG3 lags behind MG2 a bit in tracking speed, anywhere from 1 to 13 ms in my test, never faster than MG2. The false positives are different but the number of bogus notes is about the same. There was at least one instance where MG3 was better at detecting the stopping of a note in legato playing, where MG2 extended the note for too long.

The internal gain structure of MG3 must be very different from MG2 - I had to lower the volume of my test file by 17 dB to get matching velocities. I will write more about this in another post, but in short I would much prefer if the downscaling of the signal happened inside MG3 instead of me having to lower the input volume on my interface to a degree where the recorded direct guitar input becomes extremely quiet.

I know work will be put into lowering the latency so hopefully the MG3 performance will be improved over the beta test period. A lot of nice new features in MG3.

That is what I was comparing, distance from audio note to midinote for both softwares, and then I was also just comparing MG2 at different buffer sizes. No synths were running, just pure conversion.

A quick test shows that tracking speed for legacy 1.0 and MPE are virtually the same (within about 1 ms) and that monophonic tracking (whether MPE or Legacy MIDI) is pretty consistently 6 ms faster than polyphonic tracking.

I have MG2 set to monophonic for sax or any monophonic instrument. (Guitar solos?)

Latency for 32nd notes above 150bpm isn’t great on my old laptop.

So, for MG3 beta test users, a test for latency could be 16th notes at 300bpm or 32nd notes at 150bpm?

(or 8th notes at 600bpm, just for a test, eek)
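For reference, here is the arithmetic behind those three test tempos, sketched in Python. The function name is made up, and it assumes the beat is a quarter note, so 8th, 16th and 32nd notes are 2, 4 and 8 notes per beat.

```python
def note_interval_ms(bpm: float, notes_per_beat: int) -> float:
    """Time between consecutive note onsets in ms, with the beat taken as a quarter note."""
    return 60_000 / bpm / notes_per_beat


print(note_interval_ms(300, 4))  # 16th notes at 300 bpm
print(note_interval_ms(150, 8))  # 32nd notes at 150 bpm
print(note_interval_ms(600, 2))  # 8th notes at 600 bpm
```

All three work out to the same spacing of 50 ms between notes (20 notes per second), so they are the same test written three ways.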

I’m a guitar player, so I lack the formal conventions and scientific rigor that many of you are bringing to this subject. I appreciate that. From my perspective, a faster sample rate, 96k specifically, creates a much more playable MG2 experience. Is there a reason for this, and should this be part of the best practice for lowering latency?

Yes, in general a higher sampleRate is the best way to enhance performance when it comes to spectral analysis. I really hope MG3 will support sampleRates of 88200 and 96000. I have never really used MG2 with sample rates over 48000; are you sure you are getting better tracking this way? I just did a quick test - first recording a 48000 Hz file and running it through MG2, then converting the file to 96000 and running the detection again with sampleRate set to 96000. Surprisingly, the 96000 version adds more latency, maybe because MG2 doesn’t support sampleRates above 48000 and the plugin thus has to downsample the signal in real time, not sure.

Yes, that is true. I tested with my Apollo Solo interface, which has very low latency at any sampleRate. With a good ‘no latency’ interface the difference in conversion speed across different sampleRates is very small compared to the overall latency of MG. With a cheaper interface, and I think especially on a Windows computer, this might be a different story. Windows audio handling is much more prone to errors than OSX, which is why people using Windows computers for audio performance usually go through a great deal of trouble optimizing the computer for real time audio, something you don’t really need to do on a Mac.

As a practical test for MG3 beta testers:

Play 16th notes at 300bpm or 32nd notes at 150bpm.

The response latency from your speakers should not be perceivable.

(Allow roughly 1 millisecond per foot (30 cm) of distance from the speaker.)